(I really mean it when I say ramblings, I’ll try to clean up in the future).

I started working with mostly LLMs as an amateur (still am an amateur, actually). It was grueling work and cost me a lot of money and time (more time than money). I was only fine-tuning, and wow did it take a long time for a little return. Tuning itty bitty hyperparameters with Kohya wasn’t going to fly for what I wanted to do. I am only talking about 2023, not like 2015. Media was even further off. However, after working on some projects with the NFL I realized that if you were very careful you could use AWS, ffmpeg, Transformers, etc. to get some work done without getting in trouble for spend. Luckily we had a co-sponsorship, so AI/ML was largely covered.

Today I think it’s a lot easier with quantizations and smaller models, “SLMs” (edge models: Moondream2 for LLM, Qwen 2.5VL for multimodal LM, and say Wan 2.2 5B for strictly vision, etc.), and with people adopting LoRAs. For amateur work (e.g. I have a few 4090s in a server), the idea of doing anything but low-rank adapter training isn’t very popular with poor people. A ton of people have built amazing tools on GitHub in the last few years that have literally dropped the barrier to entry down to a person with a 3090 Ti. I mean, that’s an entry-level consumer GPU these days.

That’s great. I really really respect people (technology aside) that build things that make things more accessible. Henry Petroski, who was a Duke professor of engineering, wrote a bunch of books on engineering failure for laymen. And I am a layman when it comes to mechanical and structural engineering. So he made much more complex topics more accessible, and I just think that’s awesome because we simply can’t know it all. We can’t be excellent at everything. At least I can’t. If we have a curiosity and someone else is capable of distilling down something we may not have enough “life” time to truly understand, I’ll take the condensed version. In a way, taking things in like a distilled model.

Multimodal has always been attractive to me due to my interest in media and specifically, music (and playing, engineering it). Some years ago I was talking to a guy on HF that created a music POC that would generate music from trained wav files and although it sounded pretty bad, it was super cool to me and you could see how it worked (and didn’t).

Music.

AI generating music sounds painful to listen to. I wasn’t interested because I thought AI stood a chance at making music I wanted to hear (nor did I really want it to), but the fact that it could generate music that was at least listenable seemed amazing to me. This is more than just understanding what is harmonic and melodic and nice to hear, because it’s not extremely difficult to pair scales and notes together to produce “nice” sounding stuff. If it had a taste of the importance of arrangements, modes, etc., maybe it could produce something interesting. Anyway, super cool stuff. This is on my very, very long list of things to get into. I started thinking about using AI for real-time audio compression and life got in the way. I made more

Vision.

I got distracted from music when Stable Diffusion came out. I remember when I first came across 1.4 (around Halloween I think) and I was amazed at how easily I could generate terrifying images (which I ended up printing on transparencies and projecting, to the chagrin of neighborhood kids). That set my mind ablaze with other ideas, especially w/r/t shortcutting long and tedious processes. Something I’ve always loved. Some people just call it automation…

Models instead of filters

TBD model examples: Bleach bypass, Kodachrome, APX100, VectorGFX, etc.

Storm chasing and intelligent flea market eyes.

(New Vision models in the real world)

The main driver that got me obsessively into multimodal, and what dramatically drives my motivation, is being able to do tech outside of the house. If only I could use tech without being inside a computer all day, hence RV. This was absolutely key as I’m very much uninterested in staring at a screen all day, especially when it’s not summer. A lot of the tech I was/am interested in is stickier to me if I turn it into a relatable project. E.g. helping botanists differentiate two types of very very VERY different plants (one being something not to put in your salad) for their students.

I sort of liken this collecting of stuff, maybe also referred to as hoarding, to “field recordings,” which is an homage to Alan Lomax in my mind. This is something that I loved when tornado chasing. We’d go chasing, gather a ton of info, maybe or maybe not see a tornado (probably not), and capture a bunch of windfield/doppler/pressure etc. information to squirrel away and come back to the nest to crunch on.

When chasing out in the Plains or wherever, at the end of the day we’d find some cheap-as-possible motel and we’d go through all this data, signatures, CAPE, SR/H etc. (which in many cases had to be pulled with acoustic couplers, i.e. modems, attached to payphones with velcro; hello LAPM to the rescue). We would paint a picture of what happened (or didn’t happen), learn from it, and ideally use that information to figure out where to set up next. To me that was just about as awesome as I could ask for. Marrying all these bits and pieces into something at least somewhat less chaotic was attractive, as was learning hands-on all the while, seeing the very, very flat United States, etc. I digress. Whew.

Swainsboro Flea Market

Goal: Use AI to find valuable stuff.

The flea market part was practical. Walking through the flea market in lovely Swainsboro GA one fall day, I noticed the objects were impossibly small and most had price tags that were slightly readable, maybe. (Could there be an app to OCR in real time for people with bad vision?) All of the stuff in this joint was on consignment, so usually nobody’s there watching over their stuff. It’s a fool’s errand to ask the store staff about a particular booth or object in a booth. Anyway, this also means all of the objects for sale are generally sorted and labeled by different people. Different setups, booths, fonts, handwriting, lighting, etc. I had my phone and said to myself, hmm, what if… At the time, most vision models were trained on images of at most 336x (336 px on a side) for detection. That’s way north of 1100x today with bleeding-edge VLMs.

Idea: Automagically describe the object in detail and the price, if available.

Doing the math, it looked like my very big, old, and seriously 5-pound Nikon D4 at ~40MP would need to be about 1 foot from the object to get a solid and sharp 336x crop. That’s purely optical, which was ideal of course. That’s not practical. But let’s move on anyway with our Pixel 8 camera just to see what we see…
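For anyone who wants to redo that kind of math, here is a rough sketch of the arithmetic using a simple pinhole/thin-lens approximation. Everything numeric in it is an assumption for illustration (a 36 mm wide sensor, a 50 mm lens, a ~7700 px wide frame, a 50 mm wide price tag), not my actual setup, so it won’t necessarily reproduce the 1 foot figure above.

```python
def pixels_on_target(object_mm, distance_mm, focal_mm, sensor_width_mm, image_width_px):
    """Approximate how many pixels an object spans across the frame.

    Pinhole approximation (valid when distance >> focal length):
    size on sensor = object size * focal length / distance.
    """
    size_on_sensor_mm = object_mm * focal_mm / distance_mm
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return size_on_sensor_mm / pixel_pitch_mm


def max_distance_mm(object_mm, target_px, focal_mm, sensor_width_mm, image_width_px):
    """Farthest distance at which the object still spans target_px pixels."""
    return object_mm * focal_mm * image_width_px / (sensor_width_mm * target_px)


if __name__ == "__main__":
    # All values below are hypothetical, chosen only to show the calculation.
    tag_mm, focal_mm, sensor_mm, width_px = 50, 50, 36, 7700
    px = pixels_on_target(tag_mm, 1000, focal_mm, sensor_mm, width_px)
    far = max_distance_mm(tag_mm, 336, focal_mm, sensor_mm, width_px)
    print(f"tag spans ~{px:.0f} px at 1 m; 336 px needs <= {far / 1000:.2f} m")
```

Swap in your own lens and sensor numbers; a longer focal length or a bigger object pushes the workable distance out proportionally.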

In my experience with transcription it either works or fails spectacularly, hallucinates, or argues with itself indefinitely </think>. A model needs to be trusted to work correctly before you start telling it do to important stuff, obviously. And you have to check its work even if it’s the best out there, or course. That said, It may not be critical for my use cases, but say in medicine where lives may be on the line, yeah. Or maybe we shouldn’t be doing that at all right now. Already a lot of work being done to process xrays and MRI’s using AI, check out this VAE. By the way..

LLaVA 1.6, which seemed to be the best open-source model out there at the time, was not up for this task… At the time, a mere two years ago, it was equivalent to or better performing than commercial vision models.

I shelved this for a while, like 6 months, and then Qwen 2.5VL appeared. Insert wavy time lines and fadeout..

Recalibrate and test what we can do today.

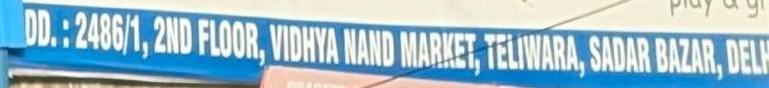

A quick test, as I don’t have any of my own very large images with text a half mile away. From Pexels (~38MP). The file below is a compressed copy at moderate JPEG compression.

Note that I resized the original image to 1344x (maximum size for this model) for 32B to get even tiles. 28 tiles.
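Here is a minimal sketch of that kind of resize math. It assumes the model’s preprocessing works on a 28 px grid (my understanding is that Qwen 2.5VL uses 14 px patches merged 2x2, but treat the 28 as an assumption) and caps the long side at 1344. The function names are hypothetical and it only computes target dimensions; the actual resize would be done with whatever image library you like.

```python
def fit_to_grid(width, height, max_side=1344, factor=28):
    """Scale (width, height) so the long side is <= max_side and both
    sides are multiples of `factor`. Never upscales."""
    scale = min(1.0, max_side / max(width, height))
    new_w = max(factor, round(width * scale / factor) * factor)
    new_h = max(factor, round(height * scale / factor) * factor)
    return new_w, new_h


def grid_cells(width, height, factor=28):
    """Number of factor x factor cells covering the resized image."""
    return (width // factor) * (height // factor)


if __name__ == "__main__":
    # Hypothetical 3:2 source frame, roughly the size of a ~38MP photo.
    w, h = fit_to_grid(7500, 5000)
    print(w, h, grid_cells(w, h))
```

Rounding each side to the grid can nudge the aspect ratio slightly, which is why a square 1344x1344 crop is the clean case: 48 cells per side, no rounding.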

Transcription:

“The text reads: "DD.: 2486/1, 2ND FLOOR, VIDHYA NAND MARKET, TELIWARA, SADAR BAZAR, DELP"”

Ok good. It works well. It mangled the H because it was cut off, no problem. Back to the flea market.

And, ran out of time. bbl.

Notes:

max 448-896 short side, Qwen 2.5VL

Qwen 2.5VL 32B 1344x ideal (square)

Text height of 24-32px; at 32px, high accuracy on common fonts from testing.
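A quick way to sanity-check a photo against those notes before sending it to the model: estimate how tall the text will be after the downscale. This is a back-of-the-envelope sketch with hypothetical function names; the 7500 px source width and 150 px text height in the example are made-up numbers.

```python
def text_height_after_resize(text_px, src_long_side, dst_long_side=1344):
    """Text height in pixels once the image is scaled to dst_long_side."""
    return text_px * dst_long_side / src_long_side


def min_source_text_height(target_px, src_long_side, dst_long_side=1344):
    """How tall text must be in the source to end up target_px tall."""
    return target_px * src_long_side / dst_long_side


if __name__ == "__main__":
    # Hypothetical: 150 px tall lettering in a 7500 px wide photo.
    after = text_height_after_resize(150, 7500)
    needed = min_source_text_height(32, 7500)
    print(f"after resize: {after:.1f} px; need >= {needed:.0f} px in source for 32 px")
```

If the text lands under the 24px mark after resizing, cropping closer before the resize is probably a better bet than feeding the whole frame.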

TBC