Speed.

I’m lucky enough to be in a place right now where fireworks (all fireworks) are 100% legal, which is good for me but…bad for dogs. Every year I re-remember that sparklers are considered the most dangerous firework precisely because the general public considers them the least dangerous. …

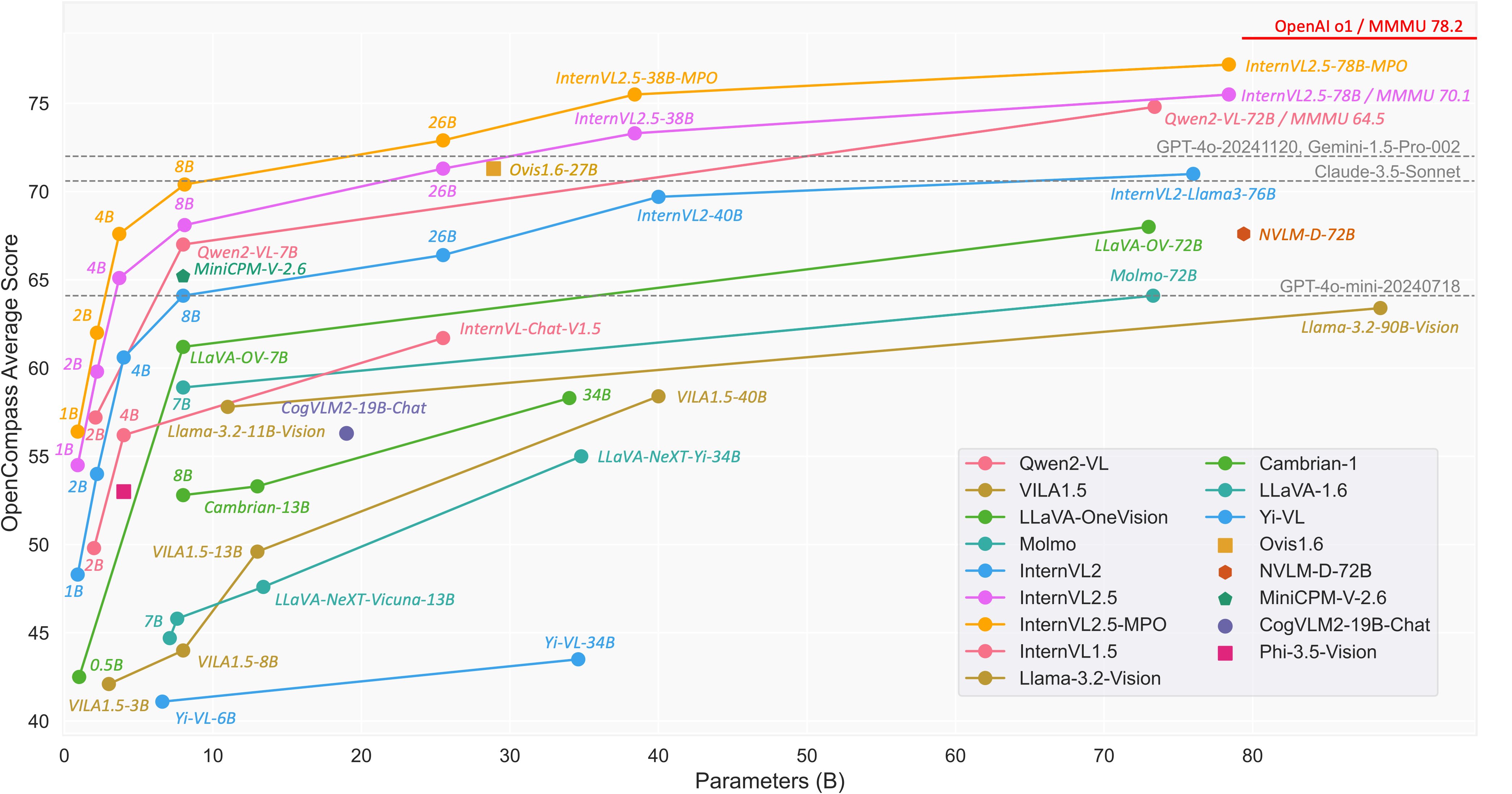

Too many models

In under 6 months, since the beginning of the year at least, there has been an incredible amount of growth in AI/ML, especially w/r/t model building. A lot of it is hype, FOMO, and whatever, but most of it is organic interest that is focusing millions of eyes on the challenge.

Multimodal models have gone from LLaVA 1.5/1.6 to Intern/Qwen 2.5 VL in a year! The differences and improvements are generational in change and nature. Get it? …mmhm.. Have you inferenced with Intern VL 72B lately? The MPO versions? It’s absolutely ridiculous how good they are at image to text. Some of the more recent MoE LLMs are insanely good and SMALL (7B!?). Huihui showed up and abliterated practically every model on HF.

Skyreels, WAN, Hunyuan: video gen models are coming out monthly. Having played with them all to a small extent, WAN is where I’d put my money (at least for this week). More and more, ComfyUI releases natively support many of these models. A multimodal model I hadn’t even heard of until today (Omnigen2) landed natively in ComfyUI, today.

Inference speed on consumer GPUs for video has gotten so good with Teacache, CFG Zerostar with CFGdistill, and the associated LoRAs that 720x480x24 @ 5 seconds is a sub-2-minute task.
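To put that in per-frame terms, here is a quick back-of-the-envelope sketch. It assumes the 24 in 720x480x24 is frames per second and treats "sub 2 minute" as a 120 second upper bound; the variable names are just for illustration.

```python
# Back-of-the-envelope: what does "5 seconds of video in under 2 minutes" imply?
# Assumption: the 24 in 720x480x24 is frames per second.
WIDTH, HEIGHT = 720, 480
FPS = 24                  # assumed reading of the "x24"
CLIP_SECONDS = 5
WALL_CLOCK_BUDGET = 120   # "sub 2 minute", taken as an upper bound in seconds

frames = FPS * CLIP_SECONDS                              # frames in the clip
pixels_per_frame = WIDTH * HEIGHT                        # pixels in one frame
seconds_per_frame = WALL_CLOCK_BUDGET / frames           # worst-case time per frame
slowdown_vs_realtime = WALL_CLOCK_BUDGET / CLIP_SECONDS  # generation time / clip length

print(f"{frames} frames of {pixels_per_frame} pixels each")
print(f"at most {seconds_per_frame:.2f} s per frame")
print(f"at most {slowdown_vs_realtime:.0f}x slower than real time")
```

Under that reading, the budget works out to about a second per generated frame at most, on a consumer card.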

So much credit to open source: llama.cpp, TheBloke (remember him?).

Happy 4th.